AI agents reportedly now automate complex workflows in more than half of today’s businesses, and AI frameworks have transformed how organizations handle intelligent automation. These systems work autonomously to perform tasks, create plans, and connect with external tools that fill knowledge gaps.

In our experience, agentic AI frameworks form the foundation for developing autonomous systems, so choosing the right one is crucial as you learn to create an AI agent. Several popular options exist. Microsoft AutoGen excels at orchestrating multiple agents in distributed environments. LangChain provides a modular architecture that manages workflows effectively. CrewAI coordinates collaborative agent systems that work toward complex goals. These frameworks come with pre-built components that support intelligent automation, including predefined workflows and real-time data pattern recognition.

In this piece, we’ll break down everything about AI agent frameworks and demonstrate how to build intelligent agents that deliver results for your specific use case.

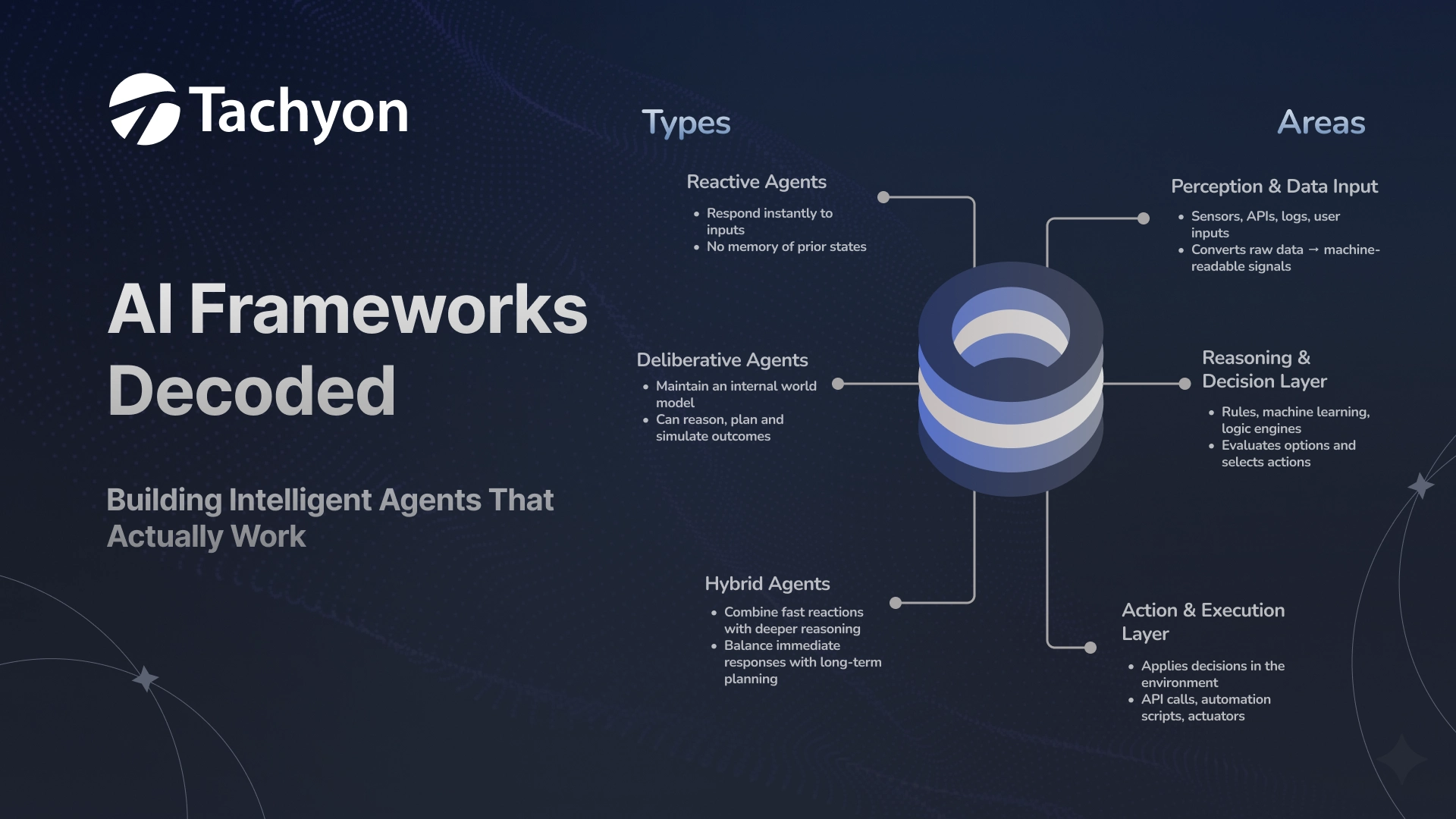

Understanding Agentic AI Frameworks

Agentic frameworks are the essential building blocks for creating sophisticated AI systems with increasing levels of autonomy. These frameworks go beyond simple code collections. They represent structured approaches to developing AI agents that can reason, plan, and execute complex tasks with minimal human input.

What is an agentic framework?

An agentic framework provides the foundational structure for developing, deploying, and managing autonomous AI systems. It sets defined parameters and protocols that govern interactions between AI agents, whether they use large language models (LLMs) or other types of software. These frameworks serve as complete blueprints that show how AI components should cooperate to reach specific goals.

Key features of agentic frameworks include:

- Predefined architecture that outlines the structure, characteristics, and capabilities of agentic AI

- Communication protocols that aid interactions between AI agents and users or other agents

- Task management systems to coordinate complex workflows

- Integration tools for function calling and accessing external resources

- Monitoring capabilities to track AI agent performance and ensure reliability

These frameworks look beyond individual agent capabilities. They show how multiple agents can work together in a coordinated system. This orchestration layer enables complex, multi-step automations that isolated AI components could not achieve.

When to use an agentic framework vs plain LLM chains

Your task’s complexity and required autonomy level determine whether you should use an agentic framework or a simpler LLM chain. Industry experts say most “agentic systems” in production combine workflows and agents rather than using one approach.

LLM chains excel when you:

- Need direct control over the conversation or task flow

- Have a process that follows a predictable, sequential pattern

- Want specific outcomes or behaviors

- Prioritize lower latency and cost

Agentic frameworks become vital when your application needs:

- Greater flexibility and dynamic decision-making

- Real autonomy to reason and select tools

- Adaptive responses to changing conditions

- Complex, multi-step problem solving

Anthropic suggests: “When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed.” OpenAI adds: “verify that your use case can meet these criteria clearly. Otherwise, a deterministic solution may be enough.”

The main challenge in building reliable agentic systems is making sure the LLM has appropriate context at each execution step. This means controlling the exact content passed to the model and executing the right steps to generate relevant information. Agentic frameworks help manage this through their structured approach to agent development.

The best AI framework for your project therefore depends on balancing flexibility and predictability. You should weigh factors like task complexity, required autonomy, and operational constraints before choosing a specific approach.

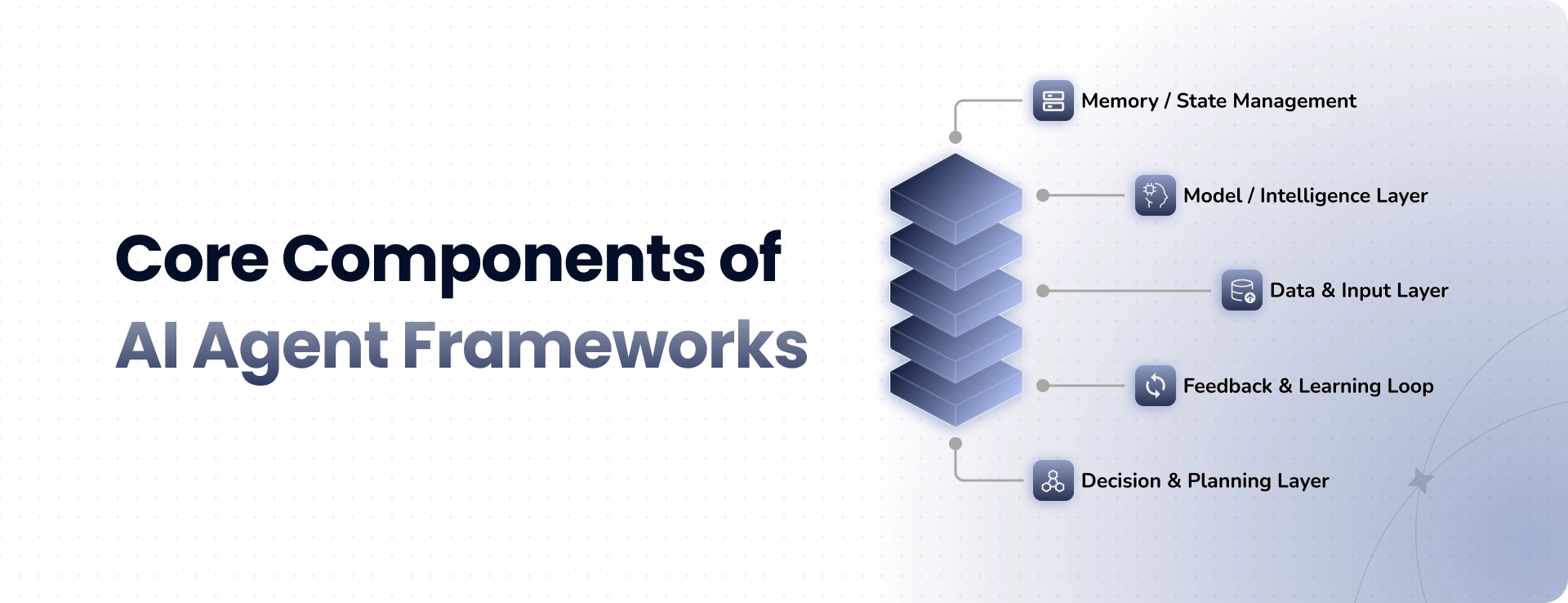

Core Components of AI Agent Frameworks

Building AI agents requires a good grasp of the core components that make up their frameworks. These building blocks combine to create systems that can perceive, reason, act, and learn on their own.

Agent architecture and planning logic

AI agents use a layered structure with perception, decision-making, action, and learning components. Planning logic sits at the core and helps agents create strategies to reach their goals. Modern AI frameworks use several planning approaches:

- Markov Decision Processes for decision-making under uncertainty

- Monte Carlo Tree Search for complex reasoning tasks

- Hierarchical Reinforcement Learning for multi-step execution

The decision-making layer uses rule-based logic, probabilistic models, or ML/DL models to guide how agents reason. ReAct agents use a reasoning-action loop that runs until they finish their task. This approach lets agents break down complex requests, plan actions, and adjust based on new information from their environment.
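
The reasoning-action loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any framework's implementation: `scripted_policy` is a hypothetical stand-in for the LLM call, and the `add` tool and the step budget are invented for the example.

```python
from typing import Callable

# Hypothetical tool registry: names and functions are illustrative only.
TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda arg: str(sum(int(x) for x in arg.split("+"))),
}

def scripted_policy(question: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM call: returns (action, argument).

    A real agent would prompt a model with the question and the
    observations so far; here the decision is hard-coded.
    """
    if not observations:
        return "add", question          # reason: no result yet, so act
    return "finish", observations[-1]   # reason: result available, so answer

def react_loop(question: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = scripted_policy(question, observations)
        if action == "finish":
            return arg
        observations.append(TOOLS[action](arg))  # act, then observe
    raise RuntimeError("step budget exhausted before the agent finished")

answer = react_loop("2+3")
```

The step budget matters in practice: without it, a model that never decides to finish would loop indefinitely.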

Communication protocols for multi-agent systems

AI agents need strong communication protocols to cooperate well. These protocols define how messages work between agents – their syntax, semantics, and practical use. The Model Context Protocol (MCP) and Agent-to-Agent Protocol (A2A) have become vital standards in this field.

MCP works “like a USB-C port for AI applications.” It provides a standard way to connect AI models to different data sources and tools. A2A helps agents talk across platforms, which lets agents from different vendors work together naturally.

Agents can communicate through a central system or directly with each other in a peer-to-peer setup. These protocols help multiple agents work as a team toward shared goals.
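
To make the centralized option concrete, here is a small sketch of a hub that routes structured messages between agents. It is not MCP or A2A; the `Message` fields, the `Hub` class, and the agent names are all assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class Message:
    sender: str
    recipient: str
    intent: str      # what the message means, e.g. "request" or "inform"
    content: str

@dataclass
class Hub:
    """Central router: agents never address each other directly."""
    inboxes: dict[str, list[Message]] = field(default_factory=dict)

    def register(self, agent_name: str) -> None:
        self.inboxes.setdefault(agent_name, [])

    def send(self, message: Message) -> None:
        if message.recipient not in self.inboxes:
            raise KeyError(f"unknown recipient: {message.recipient}")
        self.inboxes[message.recipient].append(message)

hub = Hub()
hub.register("planner")
hub.register("researcher")
hub.send(Message("planner", "researcher", "request", "Find recent MCP docs"))
pending = hub.inboxes["researcher"]
```

In a peer-to-peer setup, each agent would hold references to its peers and deliver messages itself, trading the hub's single point of control for fewer bottlenecks.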

Memory systems and context retention

Memory makes agents intelligent, but LLMs don’t remember things on their own—they need extra memory components. AI agent memory works much like human memory:

Short-term memory works like RAM and holds current task details, while long-term memory keeps information across many interactions. Long-term memory includes semantic memory (facts), episodic memory (experiences), and procedural memory (skills).

Systems like LangGraph and Redis offer specialized tools to build memory systems. These help agents keep context, learn from experience, and make better decisions over time.
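
The split between short-term and long-term memory might look like the sketch below. It is framework-agnostic and deliberately simple: the `AgentMemory` class is hypothetical, the long-term store is a plain dict standing in for a database or vector store, and only semantic memory (facts) is modeled.

```python
from collections import deque

class AgentMemory:
    def __init__(self, short_term_size: int = 3) -> None:
        # Short-term: bounded window of recent turns (oldest dropped first).
        self.short_term: deque[str] = deque(maxlen=short_term_size)
        # Long-term: facts kept across sessions; a real system would use a
        # database or vector store instead of a dict.
        self.semantic: dict[str, str] = {}

    def observe(self, turn: str) -> None:
        self.short_term.append(turn)

    def remember_fact(self, key: str, value: str) -> None:
        self.semantic[key] = value

    def build_context(self) -> str:
        """Assemble the text that would be passed to the model."""
        facts = [f"{k}: {v}" for k, v in sorted(self.semantic.items())]
        return "\n".join(facts + list(self.short_term))

memory = AgentMemory(short_term_size=2)
memory.remember_fact("user_name", "Ada")
for turn in ["hi", "what's new?", "thanks"]:
    memory.observe(turn)
context = memory.build_context()
```

With a window of two, the first turn has already been evicted by the time the context is built, while the stored fact survives.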

Tool access and function calling

Tools help agents do more than just process language by connecting to other systems. Function calling helps LLMs know when to use specific tools and what information they need. This turns LLMs from text generators into systems that can take real actions.

Tools range from simple calculators to complex APIs for weather forecasts, database queries, or financial transactions. With function calling, agents can search the web, work with data, or use enterprise systems—making them much more useful.
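
Here is a rough sketch of the function-calling round trip: the application describes a tool, the model answers with a structured call, and the application validates and executes it. The `get_weather` tool, the spec layout, and the JSON shape of the call are assumptions loosely modeled on the JSON-schema style common to function-calling APIs, not any vendor's exact format.

```python
import json

def get_weather(city: str) -> str:
    """Hypothetical tool; a real one would call a weather API."""
    return f"Sunny in {city}"

# Tool description the model would see (illustrative shape).
TOOL_SPECS = [{
    "name": "get_weather",
    "description": "Look up the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}]
REGISTRY = {"get_weather": get_weather}

def dispatch(tool_call_json: str) -> str:
    """Validate and execute a tool call emitted by the model as JSON."""
    call = json.loads(tool_call_json)
    name, args = call["name"], call.get("arguments", {})
    if name not in REGISTRY:
        raise ValueError(f"model requested unknown tool: {name}")
    spec = next(s for s in TOOL_SPECS if s["name"] == name)
    missing = [p for p in spec["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    return REGISTRY[name](**args)

# Pretend the model produced this structured output.
result = dispatch('{"name": "get_weather", "arguments": {"city": "Oslo"}}')
```

Validating the call before executing it is the important design choice here, since the model's output is untrusted input from the application's point of view.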

Monitoring, debugging, and observability

Observability grows more important as AI agent systems evolve. It goes beyond simple monitoring to show an agent’s internal state through logs, metrics, and traces.

The GenAI observability project in OpenTelemetry aims to standardize how we track AI agents. It creates common ways to measure applications and frameworks. These standards help developers track data the same way across different systems, which makes debugging and optimization easier.

Good observability tools help teams find problems, spot hallucinations, understand decisions, and build more reliable AI systems. This becomes crucial as agents handle more complex tasks independently.
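
As a rough idea of what trace data for an agent looks like, the sketch below records nested spans with timing, attributes, and status. It uses only the standard library and is not the OpenTelemetry API; the `span` helper, the in-memory `SPANS` list, and the span and attribute names are assumptions for illustration.

```python
import time
from contextlib import contextmanager

SPANS: list[dict] = []  # in-memory sink; a real setup would export these

@contextmanager
def span(name: str, **attributes):
    """Record the duration, attributes, and outcome of one agent step."""
    record = {"name": name, "attributes": attributes, "status": "ok"}
    start = time.perf_counter()
    try:
        yield record
    except Exception as exc:
        record["status"] = f"error: {exc}"
        raise
    finally:
        record["duration_s"] = time.perf_counter() - start
        SPANS.append(record)

with span("agent.run", agent="researcher"):
    with span("tool.call", tool="search") as s:
        s["attributes"]["result_count"] = 3  # attach data found mid-step
```

Spans are appended when they close, so the inner tool call is recorded before the outer agent run that contains it.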

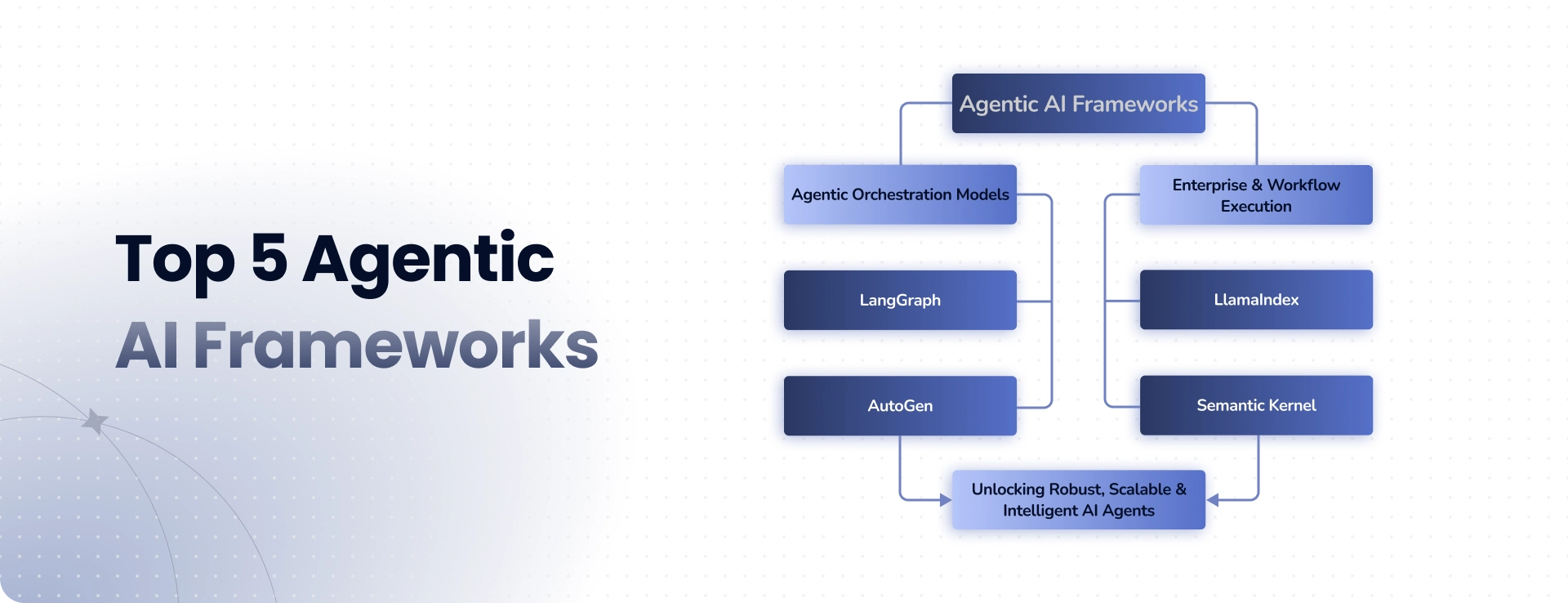

Top 5 Agentic AI Frameworks to Know

Building effective AI agents requires the right tools. The agentic AI landscape has evolved, and several frameworks now lead the pack. Each offers its own approach to agent orchestration and collaboration.

LangGraph: Graph-based orchestration for LLM agents

LangGraph builds on the LangChain ecosystem by turning agent interactions into stateful graphs. Unlike traditional LangChain chains, it treats agent steps as nodes in a directed graph. The edges control data flow between nodes. This design works well to manage complex workflows that need branching and loops.

LangGraph can persist workflow state at each step through checkpointing, which helps recover from errors: workflows can pick up right where they left off. It also lets humans review and approve specific steps before moving forward, which makes it valuable when you need oversight and approval.

LangGraph gives you the control to design reliable agents for complex tasks. It stays flexible enough to handle real-world scenarios.

CrewAI: Role-based multi-agent collaboration

CrewAI organizes AI agents that work together as a “crew.” Each agent plays a specific role with defined goals and backstory. This setup helps them work together on complex tasks.

The framework supports two main processes:

- Sequential: Tasks run in a set order, and each output becomes context for the next step

- Hierarchical: A manager agent, created automatically by the framework, handles task distribution and execution

CrewAI’s strength comes from treating AI agents like team members with different skills. This works great for tasks that need various types of expertise, from analyzing markets to creating content.
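
The sequential process is easy to picture with a toy model. The sketch below is not CrewAI code: `RoleAgent`, `Task`, and `run_sequential` are hypothetical names, and each agent's "work" is a plain function standing in for an LLM-backed role.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class RoleAgent:
    role: str
    work: Callable[[str, str], str]  # (task description, context) -> output

@dataclass
class Task:
    description: str
    agent: RoleAgent

def run_sequential(tasks: list[Task]) -> str:
    context = ""
    for task in tasks:
        # Each task sees the previous task's output as its context.
        context = task.agent.work(task.description, context)
    return context

researcher = RoleAgent("Researcher", lambda d, ctx: f"notes on {d}")
writer = RoleAgent("Writer", lambda d, ctx: f"{d} based on [{ctx}]")

final = run_sequential([
    Task("agent frameworks", researcher),
    Task("summary", writer),
])
```

A hierarchical process would replace the fixed loop with a manager that decides which agent receives each task and whether the result is good enough.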

AutoGen: Event-driven multi-agent messaging

Microsoft’s open-source AutoGen focuses on agents that communicate asynchronously. It has three main layers: Core (for event-driven agents), AgentChat (for conversational AI), and Extensions (for extra features).

The event-driven design stands out. Agents can wait for external events without stopping the whole workflow. This makes AutoGen great for distributed applications that need quick responses.
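
The non-blocking behavior can be illustrated with plain `asyncio`, without any AutoGen APIs. In this sketch, which rests on invented agent names and a simulated external event, one agent waits for an approval while another keeps making progress.

```python
import asyncio

async def approval_agent(approved: asyncio.Event, log: list[str]) -> None:
    await approved.wait()            # suspended here; the event loop stays free
    log.append("approval: received")

async def worker_agent(log: list[str]) -> None:
    for i in range(2):
        await asyncio.sleep(0)       # yield control, as a real I/O call would
        log.append(f"worker: step {i}")

async def main() -> list[str]:
    log: list[str] = []
    approved = asyncio.Event()
    waiter = asyncio.create_task(approval_agent(approved, log))
    await worker_agent(log)          # progresses while the other agent waits
    approved.set()                   # the external event finally arrives
    await waiter
    return log

log = asyncio.run(main())
```

The worker finishes both of its steps before the approval arrives, which is the property the event-driven design is meant to guarantee.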

The framework also works across different programming languages (currently Python and .NET). This makes it more useful for business applications.

LlamaIndex: Event-triggered agent workflows

LlamaIndex’s Workflow system is event-driven: steps emit and consume events, and chaining those events builds the overall flow. Steps are marked with @step decorators, which use the declared input and output event types to validate the workflow.

You get lots of flexibility with this framework:

- Choose your start and end points

- Share context across all steps

- Wait for multiple events at once

- See how your workflow runs with built-in tools

LlamaIndex works well for RAG-enhanced agents and complex patterns. You can run tasks both synchronously and asynchronously.
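
To show the idea of routing by event type, here is a toy workflow runner. It mimics the pattern only and is not the LlamaIndex API: the `step` decorator, the event classes, and `run` are simplified stand-ins written for this example.

```python
from dataclasses import dataclass
from typing import Callable, get_type_hints

@dataclass
class StartEvent:
    query: str

@dataclass
class RetrievedEvent:
    docs: list[str]

@dataclass
class StopEvent:
    result: str

STEPS: dict[type, Callable] = {}

def step(fn: Callable) -> Callable:
    """Register fn as the handler for the event type of its `ev` parameter."""
    STEPS[get_type_hints(fn)["ev"]] = fn
    return fn

@step
def retrieve(ev: StartEvent) -> RetrievedEvent:
    return RetrievedEvent(docs=[f"doc about {ev.query}"])

@step
def answer(ev: RetrievedEvent) -> StopEvent:
    return StopEvent(result=f"Answer from {len(ev.docs)} doc(s)")

def run(event: object) -> str:
    while not isinstance(event, StopEvent):
        event = STEPS[type(event)](event)  # route by event type
    return event.result

result = run(StartEvent(query="agents"))
```

Because routing depends only on the type annotations, adding a step means writing one more function rather than editing a central flow definition.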

Semantic Kernel: Modular goal-oriented planning

Microsoft’s Semantic Kernel helps businesses add AI to their existing processes through its Agent Framework and Process Framework. It combines AI and traditional code functions into single workflows.

The Process Framework handles long-running business tasks and works naturally with Dapr and Orleans distributed frameworks.

This framework targets enterprise needs with a focus on security and compliance. It connects smoothly with Azure services. Organizations that need production-ready agent systems will find it useful.

How to Choose the Best AI Framework for Your Use Case

Picking the right AI framework needs a smart approach that weighs your needs against what different options can do. Let’s look at what matters most when making this choice.

Assessing task complexity and agent roles

Your AI agent’s task complexity should be the starting point. Simple, sequential processes work well with basic frameworks. Complex processes with conditional logic need more advanced orchestration.

You should think about:

- How much independence your agent needs (UI tasks vs complex backend work)

- Whether you need one agent or multiple agents working together

- The level of reasoning your project demands

Your specific task requirements should guide you between frameworks like CrewAI (great for team-based agents working together) or LangGraph (better suited for complex workflows that branch out).

Evaluating integration with existing systems

A smooth fit with your current tech stack is vital for easy adoption. Your assessment should cover how each framework fits with:

- Your data sources and infrastructure

- Current APIs and services

- Where you’ll run it (on-premises or cloud)

Some frameworks stand out here. Semantic Kernel gives you stronger enterprise integration options, while others might be better at specific connections.

Security, compliance, and data privacy considerations

AI agents often work with sensitive data, so security is non-negotiable. Each framework needs these checks:

- How it encrypts data, both moving and stored

- Ways to control who gets access

- How it follows rules like GDPR or CCPA

Companies today manage anywhere from 150 to 700 applications. Strong security across all connection points matters more than ever.

Performance and scalability measures

Hard numbers show what a framework can really do. You need to assess:

- How fast it responds in real-time applications

- Performance under different loads

- How many resources it consumes

- What happens when it handles big data volumes

Start with small test runs before going all in. This lets you see how each framework handles your specific cases and data.
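
A small test run can be as simple as timing repeated calls and summarizing latency. The harness below is a sketch under stated assumptions: `fake_agent_call` is a placeholder for invoking a candidate framework on one of your own prompts, and the nearest-rank p95 is one reasonable choice among several percentile definitions.

```python
import statistics
import time
from typing import Callable

def benchmark(fn: Callable[[], object], runs: int = 20) -> dict[str, float]:
    """Time repeated calls and summarize latency in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    samples.sort()
    # Nearest-rank 95th percentile, computed with integer arithmetic.
    p95_rank = (95 * len(samples) + 99) // 100
    return {
        "mean_ms": statistics.mean(samples),
        "p95_ms": samples[p95_rank - 1],
        "max_ms": samples[-1],
    }

def fake_agent_call() -> str:
    """Placeholder for invoking a candidate framework on a test prompt."""
    time.sleep(0.001)
    return "ok"

report = benchmark(fake_agent_call, runs=10)
```

Running the same harness against each candidate with identical prompts gives you comparable numbers before you commit to one framework.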

Building and Scaling Intelligent Agents

Building functional AI agents starts once you have picked the right framework. Your path from concept to production needs smart implementation, testing, and scaling strategies.

How to create an AI agent using LangGraph or CrewAI

Each framework has its own way of implementing your first agent. LangGraph lets you build a graph-based workflow where nodes represent specific functions or LLM calls:

- Define your state structure to track conversation and agent context

- Create node functions that process inputs and produce outputs

- Connect nodes with edges to establish workflow pathways

- Add conditional logic for dynamic behavior

A simple LangGraph agent might include nodes that receive user input, process with an LLM, call external tools, and generate responses.
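
The four steps above can be mirrored in plain Python to show the shape of a graph workflow. This is a conceptual sketch, not the LangGraph API: the `State` fields, node names, and the `run_graph` loop are assumptions, and the `generate` node stands in for an LLM call.

```python
from typing import Callable, TypedDict

# Step 1: state structure that every node reads and updates.
class State(TypedDict):
    question: str
    draft: str
    attempts: int

# Step 2: node functions that take the state and return a new state.
def generate(state: State) -> State:
    # Stand-in for an LLM call that improves the draft on each attempt.
    attempts = state["attempts"] + 1
    return {**state, "draft": f"draft v{attempts}", "attempts": attempts}

def finalize(state: State) -> State:
    return {**state, "draft": state["draft"] + " (final)"}

# Step 4: conditional logic that loops until two attempts have been made.
def route_after_generate(state: State) -> str:
    return "finalize" if state["attempts"] >= 2 else "generate"

END = "__end__"
NODES: dict[str, Callable[[State], State]] = {
    "generate": generate,
    "finalize": finalize,
}
# Step 3: each edge maps a node to a function that picks the next node.
EDGES: dict[str, Callable[[State], str]] = {
    "generate": route_after_generate,
    "finalize": lambda state: END,
}

def run_graph(state: State, entry: str = "generate") -> State:
    node = entry
    while node != END:
        state = NODES[node](state)
        node = EDGES[node](state)
    return state

final_state = run_graph({"question": "What is MCP?", "draft": "", "attempts": 0})
```

The loop from `generate` back to itself is what a linear chain cannot express, and it is the main reason to reach for a graph.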

CrewAI takes a different approach with its role-based design that focuses on specialized experts:

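    from crewai import Agent

    # Define a role-based agent; role, goal, and backstory shape its prompts.
    researcher = Agent(
        role="Research Specialist",
        goal="Uncover relevant information",
        backstory="You're a seasoned researcher with attention to detail",
    )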

Each agent gets specific tasks with clear descriptions and expected outputs.

Testing and iterating with LangSmith or AutoGen Studio

Good testing prevents costly failures. LangSmith gives you several ways to test:

- Final response evaluation: Compares agent outputs against reference responses

- Trajectory evaluation: Assesses the sequence of steps taken

- Single-step evaluation: Analyzes individual decision points
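
The three evaluation styles can be expressed as simple scoring functions. The sketch below does not use the LangSmith SDK; the function names, the exact-match criteria, and the sample run are assumptions meant only to show what each style measures.

```python
def final_response_match(output: str, reference: str) -> bool:
    """Final response evaluation: exact match after normalization."""
    return output.strip().lower() == reference.strip().lower()

def trajectory_match(actual: list[str], expected: list[str]) -> bool:
    """Trajectory evaluation: did the agent take the expected steps in order?"""
    return actual == expected

def step_accuracy(actual: list[str], expected: list[str]) -> float:
    """Single-step evaluation: fraction of positions with the expected choice."""
    if not expected:
        return 1.0
    hits = sum(a == e for a, e in zip(actual, expected))
    return hits / len(expected)

run = {"output": "Paris ", "steps": ["search", "summarize", "answer"]}
expected = {"output": "paris", "steps": ["search", "answer"]}

scores = {
    "final": final_response_match(run["output"], expected["output"]),
    "trajectory": trajectory_match(run["steps"], expected["steps"]),
    "step_accuracy": step_accuracy(run["steps"], expected["steps"]),
}
```

In this sample the agent reaches the right answer by an unexpected route, so the final response check passes while the trajectory check fails. That gap is exactly what the extra evaluation styles are designed to expose.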

AutoGen Studio makes rapid prototyping possible without much coding. You can watch agent interactions through its user-friendly web interface with built-in monitoring tools.

Scaling multi-agent systems in production environments

Production brings its own set of challenges. Memory management comes first: you need both short-term memory for conversations and long-term memory for knowledge that persists. Tools like Zep work with frameworks to handle long-term memory needs.

Your deployment architecture needs careful planning. You can choose from:

- Distributed service-oriented architecture where agents run as independent microservices

- Centralized orchestration with a control plane managing communication

- Hybrid approaches that balance autonomy and oversight

A reliable monitoring system should track costs, latency, success rates, and error patterns at system, agent, and tool levels.
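
As a sketch of what tracking at agent and tool levels might involve, the snippet below aggregates per-call records into cost, mean latency, and success rate. The record fields and sample values are invented for illustration; a production system would pull these from its tracing or logging backend.

```python
from collections import defaultdict

# Hypothetical call records, one per tool invocation.
CALLS = [
    {"agent": "researcher", "tool": "search", "latency_s": 0.4, "cost_usd": 0.002, "ok": True},
    {"agent": "researcher", "tool": "search", "latency_s": 0.6, "cost_usd": 0.002, "ok": False},
    {"agent": "writer", "tool": "llm", "latency_s": 1.0, "cost_usd": 0.010, "ok": True},
]

def summarize(calls: list[dict], level: str) -> dict[str, dict[str, float]]:
    """Aggregate cost, mean latency, and success rate by 'agent' or 'tool'."""
    groups = defaultdict(list)
    for call in calls:
        groups[call[level]].append(call)
    return {
        key: {
            "cost_usd": round(sum(c["cost_usd"] for c in items), 6),
            "mean_latency_s": round(
                sum(c["latency_s"] for c in items) / len(items), 6
            ),
            "success_rate": sum(c["ok"] for c in items) / len(items),
        }
        for key, items in groups.items()
    }

by_agent = summarize(CALLS, "agent")
by_tool = summarize(CALLS, "tool")
```

Grouping the same records by different keys lets one data stream answer both "which agent is expensive?" and "which tool is failing?".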

Conclusion: Choosing the Right AI Agent Framework

AI agent frameworks have revolutionized how businesses handle automation and intelligent systems. In this piece, we looked at the essential building blocks that make these frameworks powerful tools for creating autonomous AI systems. Picking between LangGraph, CrewAI, AutoGen, LlamaIndex, and Semantic Kernel depends heavily on your specific requirements and goals.

Your journey to building effective AI agents starts with understanding the core components: architecture, communication protocols, memory systems, tool access, and monitoring capabilities. These elements combine to create systems that perceive, reason, and act on their own while staying reliable and performing well.

AI agent implementation works best when kept simple rather than complex. As mentioned before, most production systems mix workflows and agents instead of using purely agentic approaches. This balanced approach helps you retain control while getting the benefits of AI autonomy.

Testing and iteration play key roles in agent development. Tools like LangSmith and AutoGen Studio help evaluate performance before production deployment. It also helps to have proper scaling strategies so your multi-agent systems can handle real-world demands efficiently.

The future of agentic AI frameworks looks bright as standardization efforts like Model Context Protocol and OpenTelemetry grow stronger. These advances will make agent development more accessible and reliable for organizations of every size.

The best AI implementations come from smart framework choices and careful setup. Take time to evaluate your specific needs, existing infrastructure, and security requirements before picking any framework. Even the most sophisticated framework only adds value when it matches your business’s goals and technical limits.
